The other day, while commuting home from work on my mountain bike (another advantage of living in Utah), I was snapping some pictures and started thinking about the iPhone (and smart phones in general) as an imaging device. It really was not that long ago that cell phones were considered not only dramatically inferior to traditional cameras, but also unprofessional. My first exposure to this attitude was on a photo shoot with a U.S. special forces team, while I was making a panoramic shot with my iPhone. A major walked up to me and said, “You taking pictures with your cell phone? That’s not very professional.”

Many non-photographers and photographers alike, particularly working professionals, are accustomed to looking down their noses at cell phones as cameras. But if you look at the market, nearly all of the innovation in photography over the last couple of years has been happening in smart phones. Sure, camera sensors have gotten better and less noisy, but technology convergence is happening primarily in the smart phone market, not the camera market. On top of that, statistics show that the most common cameras are now cell phone cameras, the iPhone in particular. Flickr reports that, as of this posting, the Apple iPhone 4S is the most popular camera in the Flickr community. If you add in the iPhone 4, the fast-rising, newly available iPhone 5 and the now-waning iPhone 3GS, the iPhone platform has a huge lead in the number of cameras people are using to post to Flickr.

Lots of reviews, particularly since the iPhone 4S, have compared the performance of the iPhone camera with other camera systems, and people are asking how the iPhone has become a force in photography. What everybody seems to be missing, though, is that Apple appears to have unwittingly redefined this market through the popularity of the iPhone, and it is either unsure how to proceed or fundamentally failing to capitalize on its advantage.

It’s important to realize that Apple generally does not work this way. Typically, Apple enters markets where it sees an opportunity to meet a market need in a way that nobody else is doing. Take the music industry as an example: before the iPod there were companies making MP3 players, but Apple’s genius was realizing that the power of music on a portable device came from a database, and this is what revolutionized the music industry. In the cell phone industry, Apple’s genius was realizing that the cell phone was the ultimate expression of a portable computer driven by a touch interface, and that concept went on to revolutionize the industry. Whether by plan or happenstance, because Apple put a camera on the iPhone, it seems to have been dragged back into photography just as the world is starting to acknowledge that the power of the camera lies in software.

Yes, I said “dragged back into photography.” For the uninitiated, Apple was one of the very first companies to produce a consumer digital camera, the QuickTake, back in 1994. My first forays into digital photography were with a QuickTake 100, which I traded in for a QuickTake 200. The QuickTake had a 640×480 sensor, laughable low-light performance and perhaps… perhaps 8 bits of dynamic range on a good day. But it was a digital camera, with all of the advantages that digital provided.

The QuickTake gave you immediate results that you could then, somewhat conveniently, email to others and even post to the nascent World Wide Web. The scene above, a JPEG straight out of that Apple QuickTake 200, was one of the very first posts on Jonesblog back in 1997, from the North Rim of the Grand Canyon. The plastic lens (manual focus, with two! manual aperture settings) and limited resolution made for images that were neat and convenient at the time, but certainly unsatisfying when it came to faithfully rendering the scene. 1996 was also when Steve Jobs returned to Apple, and by later in 1997 Apple had pruned just about all of its product lines to the bare bones in an effort to keep the company alive. The QuickTake cameras were among the first things to go, along with printers, displays and other peripherals. Given Apple’s profits and prominence today, it’s easy to forget all this and just how close Apple came to going away.

The low-quality images from that QuickTake kept my film camera in routine use until 2004, when the 8 MP Canon 20D came out. That is when I dumped my film cameras almost entirely and put the Apple QuickTake in storage. As it turns out, 2004 was roughly the tipping point at which the technology made digital photography viable for professional use: digital files could start to rival the quality of film and begin to supplant traditional film cameras.

Interestingly, 2004 was also when Steve Jobs started gathering a team at Apple for “Project Purple” to develop the concepts behind the iPhone. Almost three years later, when the iPhone was announced on January 9th, 2007, it absolutely redefined the industry and showed the world the way forward that every other smart phone maker would copy. This was also when the two fields, cellular communications and digital photography, were moving ever closer to convergence.

While several cameras in the early ’90s had cellular transmission built in (from Kodak and Olympus), the first cameras in cell phones did not appear until 1997 or so. However, by 2005 Nokia had become the world’s biggest camera manufacturer by virtue of the tiny cameras embedded in its phones. These cameras were designed to take primitive snapshots, and their quality was so bad that most folks did not take them seriously. Technology does improve, though, so two years later, when the iPhone was released in 2007, it was reasonable to expect it to have a camera. Whether the camera was seen as a crucial part of the iPhone strategy, I don’t know, but because Apple conceived the iPhone from the beginning as a “computer,” one could do more with that camera than simply grab snapshots.

The market continued to evolve: by 2008 Nokia sold more cameras than Kodak, and by 2010, with a billion camera phones in the market, sales of dedicated cameras began to decline. By 2011, Apple’s dominance of the cell phone industry was really gaining steam, and in February 2012 Kodak announced it would stop making cameras altogether. Today more and more businesses and even governments are adopting smartphones as the trend accelerates, and essentially every smart phone sold includes a camera.

The important point for photography is that becoming the second-biggest cell phone manufacturer, and certainly the leader in cell phone design, has by default brought Apple headlong into a dominant position in digital photography. So… the question now is: does Apple view photography as a strategic focus? The existence of iPhoto and Aperture, along with features like high dynamic range (HDR) imaging and the panorama function on the iPhone, suggests that Apple is putting some effort into photography. But given its potential impact and influence on photography, is Apple doing enough?

The image quality is good, and people are certainly using their iPhones to make photographs, especially as the images coming out of iPhones have improved dramatically over the past couple of years. Interestingly, in some circles those photographs are even starting to be used professionally. In fact, when I look at the original image files from that 2004-era Canon 20D, the image quality looks remarkably similar to what comes out of the current-generation iPhone 5. Granted, the optics are a huge limiting factor, and if I had to choose a camera I’d still take the 20D over the iPhone, but it’s getting close… High-quality lenses really do make all the difference in the world, but you can do a tremendous amount with software, and it turns out there are things you can do with the iPhone that you’d never be able to do with a traditional camera.

Making panoramic images is one example. A dedicated fisheye or high-quality wide-angle lens can cost well into four figures. The iPhone camera’s panorama function, however, makes beautiful panoramas and wide-angle images possible by linking real-time image processing with rapid post-processing right on the phone (Full resolution panorama of the above image here). The secret is that instead of needing a wide-angle lens, you pan the iPhone and software stitches the images together in near real time.
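To make the “software stitches the images together” idea a little more concrete, here is a toy Python sketch. It is not how Apple implements its panorama feature; real stitchers use feature matching, lens-distortion correction, projective warping and blending. This sketch assumes a perfectly horizontal pan with no rotation or exposure change, finds how many columns two neighboring frames share by minimizing the mean squared difference over every candidate overlap, and then joins them. The function names are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def overlap_error(left: Image, right: Image, overlap: int) -> float:
    """Mean squared difference between the last `overlap` columns of `left`
    and the first `overlap` columns of `right`."""
    total = 0.0
    count = 0
    for row_l, row_r in zip(left, right):
        for a, b in zip(row_l[-overlap:], row_r[:overlap]):
            total += (a - b) ** 2
            count += 1
    return total / count


def best_overlap(left: Image, right: Image, min_overlap: int = 1) -> int:
    """Try every candidate overlap width and keep the one with the lowest error."""
    max_overlap = min(len(left[0]), len(right[0]))
    return min(range(min_overlap, max_overlap + 1),
               key=lambda k: overlap_error(left, right, k))


def stitch(left: Image, right: Image) -> Image:
    """Join two frames from a horizontal pan, dropping the duplicated columns."""
    k = best_overlap(left, right)
    return [row_l + row_r[k:] for row_l, row_r in zip(left, right)]


if __name__ == "__main__":
    # A synthetic 3x8 "scene" seen as two 5-column frames sharing 2 columns.
    scene = [[10 * r + c for c in range(8)] for r in range(3)]
    left = [row[:5] for row in scene]
    right = [row[3:] for row in scene]
    print(best_overlap(left, right))  # 2
    print(stitch(left, right) == scene)  # True
```

The exhaustive search is the simplest correct choice for a toy; a phone doing this in near real time would need something far cheaper, such as using motion-sensor data to narrow the candidate offsets.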

The iPhone does not have the best camera out there in a cell phone, but with 8 megapixels and 1080p Full HD video at 30 frames/s, it is a camera platform Apple has historically sold over 85 million of, with no sign of slowing down. That is suddenly pretty compelling compared with the 2004 Canon 20D, which also had an 8.25 megapixel sensor but no video, and which, if you pushed it to ISO 3200, made images no better than those from the iPhone 5. For example, the photo above was taken from 32,000 feet on a recent Delta flight from New York to Utah. I’ve taken many pictures from planes over the years, and I’ll tell you that shooting this with the iPhone 5 came pretty close in quality to the images I used to get from the airplane with a Canon 20D.

I started taking the iPhone seriously as a camera a couple of years ago, with this image of Gravity Probe B and this image captured on the way home from Idaho. The ability to document an event, or a time and place, quickly and conveniently is the basis for Chase Jarvis’ maxim “the best camera is the one that’s with you,” and these days people seem to always have their cell phones with them.

So how close are we to the iPhone, or some other Apple imaging product, revolutionizing the industry? An argument could be made that it already has. Whether Apple takes this market seriously and becomes more relevant to “professional” photography is another question, one that depends on its commitment and on the software it produces.

The painful reality for many photographers is that Canon and Nikon make lousy software… They really do. I’m actually surprised that they, like the automotive industry, have been able to get away with poor software design for so long. Their software is slow and cumbersome, and it fails to capitalize on so many potential improvements in imaging. Don’t even get me started on the software and interface design from Sony, Olympus or Fuji… In some cases the camera software is so bad that one might have expected Adobe to do more here, perhaps working with the camera manufacturers to define software for cameras.

Yes, Adobe has included some very cool filters and effects in Photoshop, notably the Content-Aware tools, but all of that innovation is in post-processing, and even in that arena there is so much more that Adobe seems reluctant to do. Using Photoshop has really become a problem of interface, and Adobe’s solution has been to fragment the Photoshop platform into Lightroom, Photoshop Elements, etc. In some cases that makes sense, and in others it seems ridiculous, but it is the path Adobe has taken, slipping in substantially new technology and features only at a glacial pace over the years. I’ve wanted additional filters, for instance FFT filters, for years.

Apple has done some things better where it has chosen to write software, notably in image management with iPhoto and Aperture, but updates to those products arrive with irritating irregularity. I use Aperture despite its apparent lack of attention because the workflow makes sense, though Lightroom is a compelling alternative, especially with some of its built-in post-processing features. That said, it seems Apple could have done so much more here. Years ago there was even some discussion in certain circles of Apple creating a Photoshop competitor, though the realities of the market meant that Apple needed, and perhaps still needs, Adobe to make Photoshop. I certainly need Photoshop, but it’s about time someone did something different and better in image processing. ImageJ and Fiji work rather well in many cases, but it would be nice to have more refinement and hardware optimization than these applications offer.

Fundamentally, though, you are still talking about separating image capture from image processing. In fact, most components of photography that have historically been considered separate now need to converge, and they are on the verge of coalescing into a more efficient workflow for the photographer. Historically, photographers working digitally operate their cameras to capture the image they want, then download the data files, manage those files in some sort of temporary or permanent database, and add appropriate or desired metadata like GPS coordinates and keywords. Next they perform any desired post-processing, such as correcting the angle, cropping, adjusting color and vignetting, or making more dramatic modifications in software like Photoshop. Finally, the photographer has to upload the image for publication or print it out.

We have an opportunity now to start the convergence of all of these functions…

One might expect new technologies, or technology convergence, to show up first in high-end products, as they do in the automobile and computer industries, but there are a couple of exceptions. The first is when companies either don’t understand a technology or are unsure how to capitalize on it; the second is when they understand it deeply and want to exploit it by getting it into as many hands as possible.

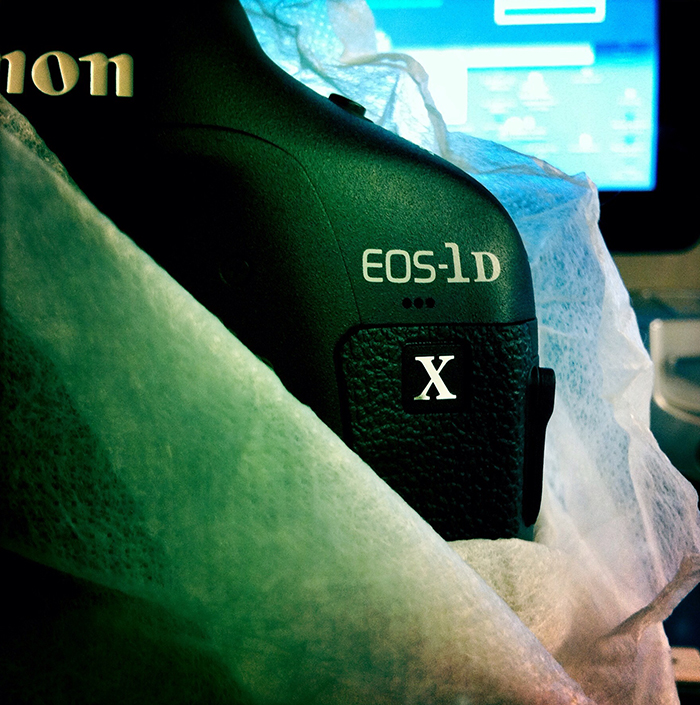

Traditional camera companies seem to be integrating new technologies at the low end of the market right now, and I’d argue that is because they do not understand, or do not know how to use, those technologies. The camera industry as a whole seems to have bet on what it knows, guessing that its convergence would be video in addition to still imagery, a move I think was misguided. At the high end of the professional camera market, typified by the Canon 1D X shown above, we don’t have any of the features one might want in a convergent device except video. There is networking, but it happens after the photos are taken, unless you connect an external computer to stream them off or buy separate WiFi networking for a few hundred dollars more. Fundamentally, though, the flagship Canon 1D X and every other professional camera that can network, like the Nikons, does so awkwardly and only with additional add-on devices. The same is true for GPS: the 1D X can do it as well, but only with another $250, unwieldy add-on. It’s true that cameras are starting to implement GPS and even WiFi internally, but interestingly, this is happening at the low, consumer end of things rather than the professional end.

However, technology convergence is where the iPhone is perfectly situated and where it excels. These convergent features are things the iPhone does so much better than traditional cameras, even professional SLRs. The iPhone has built-in GPS, a built-in digital compass, a magnificent display, built-in WiFi, Bluetooth and stereo output, all things that the range-topping products from Canon and Nikon do not have. Apple’s iCloud service even handles file transfer, backup and storage in a remarkably slick manner.

Apple’s approach to most of the image-capture interface software, however, seems to have been to leave development up to the community of developers. That is not such a bad decision for individual apps, but perhaps not great for complete workflows, and it holds Apple back somewhat from wider adoption as professional gear. As it stands, there are now hundreds of apps that take advantage of the iPhone’s imaging capabilities, and some of them are quite good and have become part of my everyday photographic toolkit: apps like my new favorite black-and-white photography app, Hueless (thanks, Xeni), the old standby Hipstamatic and a few others. These apps combine instant image processing with the capture process and even interface to some extent with the current iPhone workflow, which saves files to common directories. What I would prefer, however, is for all of these apps to write out a common RAW image along with the transformed image, and to handle them the way Aperture does, with masters and modified versions. It’s theoretically possible for the iPhone to write out RAW imagery while providing a parallel stream for image operations, much as it already does with the standard and HDR images. For instance, the three images below, which inspired this whole post, were captured on the bike ride home through some singletrack. They are three separate images of the same scene, shot within a minute or two of each other. It would have been nice to capture them all at once in RAW format and then apply the apps as separate image filters, in an Aperture-for-iPhone manner.*

This image of the singletrack on the East Bench of Salt Lake City was shot with the standard iPhone camera as the sun set behind the Oquirrh Mountains on the other side of the valley.

The same scene, this time shot with Hipstamatic.

The same scene, this time shot with Hueless. A hiker had strolled into the field of view by the time I snapped this third image.
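The “masters and modified versions” idea from the Aperture wish list above can be sketched in a few lines of Python. This is a minimal illustration under loose assumptions: a single captured frame is kept as an immutable master, and each app’s look is stored as an ordered list of filters that is re-applied on demand instead of overwriting pixels. `Master`, `Version`, `black_and_white` and `warm_tint` are hypothetical names; the filters are stand-ins, not what Hueless or Hipstamatic actually do, and a real RAW pipeline would involve demosaicing and far larger images.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]                      # (R, G, B), each 0-255
Filter = Callable[[List[Pixel]], List[Pixel]]     # one image operation


@dataclass(frozen=True)
class Master:
    """The single captured frame; never modified after capture."""
    pixels: Tuple[Pixel, ...]


@dataclass
class Version:
    """A named edit: a reference to the master plus an ordered filter stack."""
    name: str
    master: Master
    filters: List[Filter] = field(default_factory=list)

    def render(self) -> List[Pixel]:
        # Start from a fresh copy of the master on every render, so the
        # master itself is never overwritten.
        out = list(self.master.pixels)
        for f in self.filters:
            out = f(out)
        return out


def black_and_white(pixels: List[Pixel]) -> List[Pixel]:
    """Hypothetical monochrome filter using Rec. 601 luma weights."""
    result = []
    for r, g, b in pixels:
        y = round(0.299 * r + 0.587 * g + 0.114 * b)
        result.append((y, y, y))
    return result


def warm_tint(pixels: List[Pixel]) -> List[Pixel]:
    """Hypothetical 'vintage' look: boost red, cut blue, clamp to 0-255."""
    return [(min(r + 20, 255), g, max(b - 20, 0)) for r, g, b in pixels]


if __name__ == "__main__":
    shot = Master(pixels=((200, 100, 50), (10, 20, 30)))
    for version in (Version("standard", shot),
                    Version("mono", shot, [black_and_white]),
                    Version("vintage", shot, [warm_tint])):
        print(version.name, version.render())
```

Because each version stores only a recipe, the three singletrack shots could in principle have been one capture plus three recipes, each of which can be changed later without touching the original data.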

In short, we are rapidly approaching a point where Apple has many of the tools its workflow needs to redefine how photography works for professionals as well as consumers. Some professional photographers have embraced smart phones in their workflow, and some, like my friend Trent, are even teaching college classes on photography with smart phones. That said, while the iPhone is being used professionally by some in limited capacities, right now the smart phone is nowhere near a replacement for SLR camera systems: it lacks the dynamic range of the current generation of SLRs and all of the manual options for selecting aperture, shutter speed, etc. The iPhone has no optical zoom, and because of its very small sensor (only a few millimeters across), its high-ISO images cannot compete. Additionally, the iPhone cannot perform weighted metering or deal with more complex lighting, for instance. But all of this is conceivably possible. How cool would it be to set up a Strobist-style photoshoot, controlling all of your lighting from the iPhone?

Technology convergence in the iPhone is showing the world the way forward in integrating technology into photography. It remains to be seen whether Apple will capitalize on this, or how soon camera companies will adopt strategies pioneered by the smart phone industry.

* One can discuss what this means for photojournalism and what counts as reality, but that is another post. If you are interested, look into the furor created when Damon Winter used an iPhone and Hipstamatic to document “A Grunt’s Life,” winning third prize for his New York Times piece.

Great write-up. I love the Nikon D800 output, but the add-ons and menus make for a clunky workflow at times. Intuitive software and innovations like “Tap to Focus/Exposure” would be interesting to try on an SLR in live view. That aside, I shoot a great deal on both my iPhone and my SLR, while my point-and-shoots are in storage somewhere…

How cool would it be to do other things with your camera, like set up your intervalometer or use the built-in CPU to capture derivative imagery, like… only showing the pixels that change over multiple exposures? So much is possible that it boggles the mind what the camera manufacturers have been missing…

Have you read Makers by Cory Doctorow? The first half is set in the very near future and focuses on a journalist who uses her phone for all of her professional video and photo needs. It works pretty well. Although the novel’s primary focus is 3D printing and what it can achieve in society, it’s also about convergence on a level we’re already starting to see today.

As a professional producer (short-form documentary, some live production), I’ve begun using SLRs for video. I’ve found that the output quality from a sub-$2k consumer SLR surpasses what I was getting from our $5-10k video cameras just a couple of years ago. With an external audio recorder and mic preamp, it’s even better.

I’m extremely excited about the future!

I’ve not read Makers yet. As to video, yes… I absolutely agree that video was a natural extension of still photography, but it’s still pretty “safe” in terms of what cameras can do. It’s also a completely different workflow, with infrastructure requirements beyond what people doing still photography have in many cases. What would be nice is some real out-of-the-box thinking from camera manufacturers on interface and additional convergence that would deliver *more*.

I still don’t have an iPhone, but phones in general have definitely come a long way since my clunky W810i, and that’s of course in very large part because of what Apple came out with.

Here’s something I saw advertised recently (http://www.gizmodo.com.au/2012/11/will-i-ams-new-phone-case-worse-than-his-band/). I can’t say much about how it looks or how well it actually works, and Gizmodo doesn’t talk about this particular “case” very favorably. But it looks like there are ways to improve on the built-in camera with add-ons, even including zoom lenses (as I’m sure you, as a frequent flyer, have seen advertised in SkyMall). It’s no surprise that many companies are getting in on this when the iPhone is so popular as both a camera and a phone.

Heh… Yeah, I saw that case. On the one hand it’s so blingy that it’s easy to dismiss as absurd, but the future of photography is convergence. I don’t think will.i.am, or whatever his name is, has it completely right here, but it is interesting.

O.K., yeah, I think I see where this is going…!!!

Here’s another minor narrative in support of your thesis: another pro-photographer friend has noted that phone cameras seem less intrusive to people and subjects. For example, he told how a street musician in New Orleans recoiled when a full-on camera was pointed at him but said nothing when an iPhone was held up instead…

Yeah, particularly when you have a big-assed white Canon 70-200 f/2.8 with the lens hood poking at them. This is why it often helps in street photography to have something less obtrusive. Trent Nelson (linked above) uses his iPhone for street photography *all the time* and gets great stuff from it.

Oddly enough, what springs to mind is Parrot’s clever AR.Drone. They offloaded their entire user interface onto the iPhone platform (and later to Android as well, I think). Assuming the end user already had a smartphone let them focus on the flight hardware.

I wonder if some camera maker might take a similar approach. I can envision someone producing a barebones SLR that focuses only on the sensor and optics, while offloading the whole software interface to a smartphone app via WiFi. (They’d have to do something pretty clever to avoid a storage bottleneck, but the rest seems pretty straightforward.) The obvious thing to do is snap the smartphone onto the camera as a digital viewfinder, but I suspect there are even more interesting possibilities if it remains detached.

A standardized interface would be interesting indeed…